Mise-Unseen: Using Eye-Tracking to Hide Virtual Reality Scene Changes in Plain Sight

Welcome to the second article from XR Researched (woo)! As a note, XR Researched will continue as a biweekly publication to ensure the content is as good as it can be. Happy reading!

This Week’s Paper

Marwecki, Sebastian, Andrew D. Wilson, Eyal Ofek, Mar Gonzalez Franco, and Christian Holz. 2019. “Mise-Unseen: Using Eye Tracking to Hide Virtual Reality Scene Changes in Plain Sight.” In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology, 777–89. UIST ’19. New York, NY, USA: Association for Computing Machinery.

DOI Link: 10.1145/3332165.3347919

Why this?

It’s been over a year since I was introduced to the supposedly amazing VR game Half-Life: Alyx, but I have not been able to try it. This is because it requires high computational power to support the game’s graphics and gameplay, so I would need to link a powerful enough PC to my Oculus Quest device to run it.

Rendering and computational cost has been one of the key limitations of commercial VR devices. While there have been rapid advancements in VR devices’ hardware, there is also a growing body of work that tries to work around these hardware limitations.

This paper is a great example of such and shows how our visual perception’s unique qualities can be exploited to trick the user’s mind to relieve the headset of unnecessary computations. Mise-Unseen has more than a couple of moving parts and potentially a few new terms/theories, so bear with me!

Summary

Design

Mise-Unseen is a system that decides when and where to create/rearrange objects within a user’s field of view to minimize the probability of the user noticing it. Its goal is ambitious: to prevent the user from noticing a change would mean that Mise-Unseen needs to not only ensure that users do not observe the change, but also that the user does not anticipate nor realize that the change has occurred. Here’s how they tackled each of these 3 challenges:

1. Preventing observation of the change

There are two steps that Mise-Unseen takes to prevent observation. At the base level, Mise-Unseen uses eye-tracking to add/rearrange objects outside of the user’s focus area (fovea). It further reduces the likelihood of detection by either applying visual masking techniques to the object or by changing the scene when the user is distracted.

The visual masking techniques that can be employed are: creating salient noise to distract the user from the change, reducing the strength of the change by using techniques like lowering the contrast or by using gradual fade, and by providing tasks to overload the attention of the users.

Distraction is measured by two models: the pupil diameter size and the number of saccades over time. A saccade is the rapid movement of the eye to quickly scan and map key features in a scene. Informed by these models, Mise-Unseen injects a change when the user is distracted.

2. Preventing recollection of the change

To prevent recollection, Mise-Unseen recommends making changes to objects/locations that minimize the violation of the user’s spatial memory. To do this, it creates a graph that represents the user’s spatial memory, connecting relevant objects to each other and to the user. The system increases the weights between user to object when the user looks at it, and the weights between two objects when the user looks at them in a row. The system decreases the weights when the user looks away.

Mise-Unseen uses this graph to calculate the difference between the weights before and after the proposed change. It then recommends the change that minimizes this difference to reduce the probability of recollection.

3. Preventing anticipation of the change

There are 3 ways that Mise-Unseen prevents the user from anticipating a change, and different applications can choose to use different methods. The simplest is by using random intervals to determine when to add a change. The second way is by leveraging user intent: once intent is made clear through sufficient dwell time from the user’s gaze, Mise-Unseen will implement the change. The third way is to use the graph mentioned above to discern user understanding and decide whether the user has pieced together a sufficient map of the space before Mise-Unseen continues to add changes to the scene.

Applications of Mise-Unseen

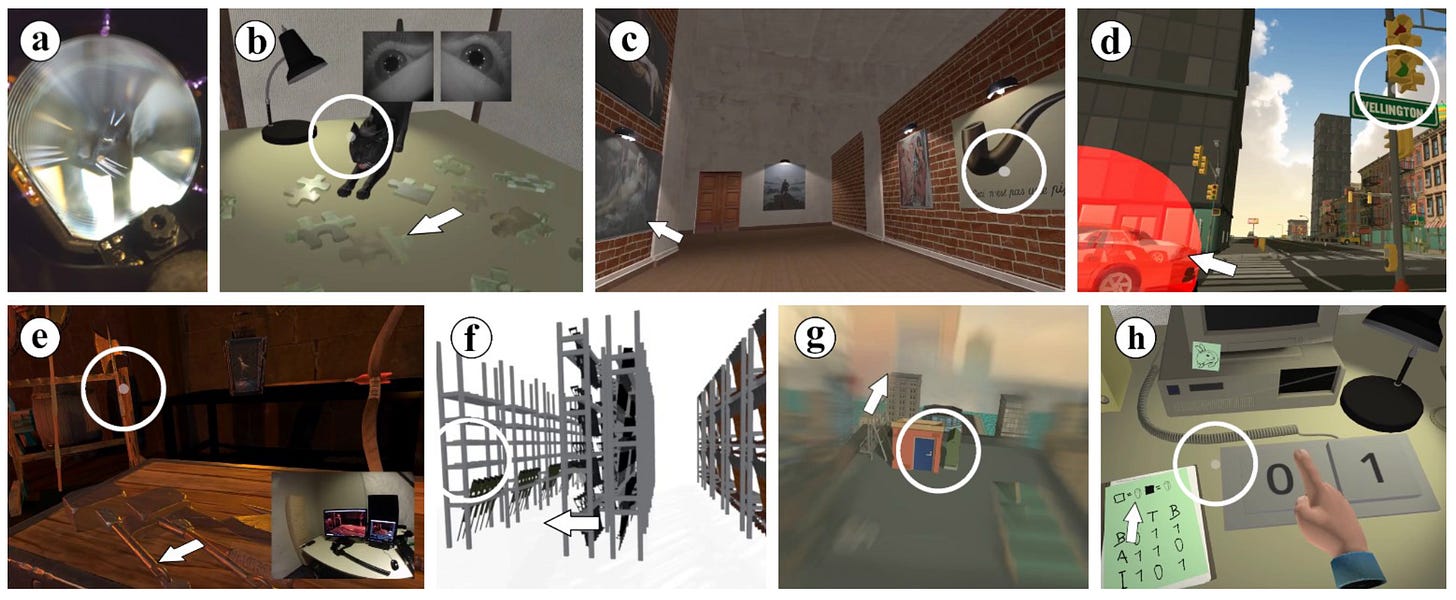

The authors created 7 applications to show the wide range of ways Mise-Unseen can be used. These span from customizing the scene based on the user’s inferred preferences, to minimizing motion sickness, to using one passive physical prop as haptic feedback for multiple virtual objects.

Results

Perhaps it seems almost too good to be true? The authors conducted two studies to evaluate its effectiveness, the first is a simple visual search task where participants had to identify newly added dots created with different visual masking techniques. This was to compare the effectiveness of each technique to the baseline and off-fovea conditions. In the second study, participants were randomly assigned several of the applications where they gave qualitative feedback and completed a 1-7 scale survey. Below are some of the results that I find interesting:

Detection rates when we add the visual masking techniques “low contrast”(0.53) and “add-a-task”(0.4) are significantly lower than the baseline(0.87) and off-fovea(0.76) conditions.

There is a correlation between cognitive load (through pupillometry data) and task performance, meaning changes are less noticeable with a higher cognitive load.

When a motion distractor is added, the participants cannot detect any changes.

Most of the applications had participants saying they at least slightly noticed the changes.

On a scale of 1-7, participants rated their enjoyment of the applications as jigsaw 5.2, hacker riddle 4.9, gallery 5.2, forge 6.5, and city 4.1.

Related Work

Their related work references several visual perception theories and masking techniques to inform the models used in Mise-Unseen, as well as applications that utilize such theories to perform other cognitive illusions on the user. Here are a few that I would highlight:

On visual perception theories and visual masking techniques

The concept of inattentional blindness is the failure to notice unexpected but clear changes because a person’s attention is occupied with another task/event/object. This is the famous gorilla-in-the-room paper. (Link)

Leveraging change blindness, where a human wouldn’t notice a change in moments of brief visual disruptions like during saccades or blinking, to reduce rendering times without compromising the perceived visual quality (Link)

A review of studies that evaluate the correlation between pupillometry (pupil dilation) and cognitive load. Found that pupil dilation can be an indicator of cognitive load. (Link)

Large changes can go undetected when they are made with minimal disruption, like slowly fading them in. Doing this was found to be more effective than change blindness, where you would flash a blank screen or add the change during a blink. (Link)

A review of computational cognitive models to map spatial memory (Link)

On applications

A breadth-first survey of using gaze in applications (Link)

On-the-fly remapping by predicting user intent based on gaze and hand motions so a passive prop can be reused for a diverse range of VR haptic feedback purposes (Link)

Redirected walking by changing the scene during moments of temporary blindness (saccadic suppression) (Link)

Technical Implementation

The authors used the VIVE Pro HMD and trackers to track the props and the participants’ heads and hands.

PupilLabs is the system used for eye-tracking. The eye-tracking system needs to be recalibrated for each user.

To determine what is within the user’s focal point, the authors factored in possible tracking errors and referenced a paper to determine the base angular threshold of foveal vision. (Link)

Covert attention is the phenomenon where the brain pays attention to an object without the eye gazing directly at it. To account for this possibility, the authors exploit the fact that covert attention is impossible within 300ms from the moment of a saccade. (Link)

Discussion

Why is this interesting?

What I find most intriguing is how this research exploits our visual system’s “flaws” to circumvent VR’s current limitations. A more obvious benefit to this is what I mentioned in the introduction, where conditional rendering can reduce the computational cost of VR applications.

It is also beneficial as VR becomes more tactile. We can, for example, use just one physical prop to represent multiple objects virtually. Depending on user intent inferred through gaze, we can move the virtual object to the position of the actual prop to minimize the number of physical props needed to be made.

I also think this research’s findings open up the design space for possible applications. If and when commercial VR headsets implement eye-tracking, we can reference this research to design unique gameplay mechanics, intent-based scene updates for productivity, and personalized applications based on the user’s preferences, among others.

Limitations/Concerns

Although Mise-Unseen’s potential is great, I do think we’d need to answer two questions to understand the extent of its benefits. The first is how large a change can be to have it still go unnoticed. I am optimistic about the answer to this since previous research in related work has shown that substantial changes can go unnoticed (like the famous gorilla-in-the-room paper).

Looking at the results, we can also see that even small changes can still be noticed. 5/6 visual masking techniques had a minimum of 40% detection rate. When interacting with the applications, participants also answered that they at least slightly noticed the changes. Is this result sufficient to ensure that we can inject unnoticeable changes within a user’s field of view? We’d need to figure out why the detection rates are so and how we can further reduce them.

Opportunities

I think there are a lot of components in this work that can be extended. For one, I would love to see work that extends sensors beyond eye-tracking or explores different attention models/visual masking techniques to reduce the detection rates.

The enjoyment results from the applications are also interesting to dissect. What caused the varied ratings between the applications? Are these visual masking techniques disorienting/uncomfortable? What happens when a user notices the change? Do they end up disliking the application more?