Loki: Facilitating Remote Instruction of Physical Tasks Using Bi-Directional Mixed-Reality Telepresence

Hello! It’s been a hot minute. I took a break from writing this newsletter due to personal circumstances, but I am now back and ready to go. During that time, though, I’ve been looking for in-person classes to join. But instead of singing classes in a room with a piano and a mic, group-based art history discussions, or even personal trainer sessions in the gym, I’ve found that many instructors now only offer online sessions. It seems like online learning is here to stay.

I can’t help but feel disappointed that all these classes are Zoom-based. I wanted the completely immersive experience that an in-person class brings - focusing on the teacher, discussing freely with other students, and practicing in a room equipped for the task. But since online learning does have its advantages, the question I’ve been thinking about is how we can bridge the immersion gap. This paper tries to do just that: it explores how AR/VR can enhance teaching physical tasks remotely.

Reading this paper helps us picture what remote collaboration could look like, question whether this is the direction we want to go, and identify points to keep and improve.

📄 This Week’s Paper

Balasaravanan Thoravi Kumaravel, Fraser Anderson, George Fitzmaurice, Bjoern Hartmann, and Tovi Grossman. 2019. Loki: Facilitating Remote Instruction of Physical Tasks Using Bi-Directional Mixed-Reality Telepresence. In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology (UIST '19). Association for Computing Machinery, New York, NY, USA, 161–174. https://doi.org/10.1145/3332165.3347872

📸 Summary

Overview: The Loki System

Loki is a system designed to help remote instructors synchronously give one-on-one physical task classes to their students. It supports features such as session recordings, mid-air annotations, and real-time 3D reconstruction to improve the remote class experience.

Teaching physical tasks

The authors introduced several theories on how to teach physical tasks appropriately. They highlighted 3 in particular: Fitts and Posner’s three-stage model of motor skill acquisition, cognitive apprenticeship by Collins et al., and Kolb’s experiential learning cycle. These theories suggest 4 requirements that systems should meet:

Observation - Provide a way for the learner to observe the teacher and vice versa

Feedback - Allow the teacher to provide feedback during or after the session

Abstraction - Provide a way to conceptualize the task, e.g., through text or annotations

Reflection - Allow the learner to review their task performance, e.g., by reviewing a recording of it with their teacher

Loki was designed to fulfill these 4 requirements.

🎨 Design

We can think of Loki as an upgraded Zoom that contains 3D spatial features. Each user (instructor or student) will use an HTC Vive headset and controllers to interact and will be surrounded by Kinect cameras.

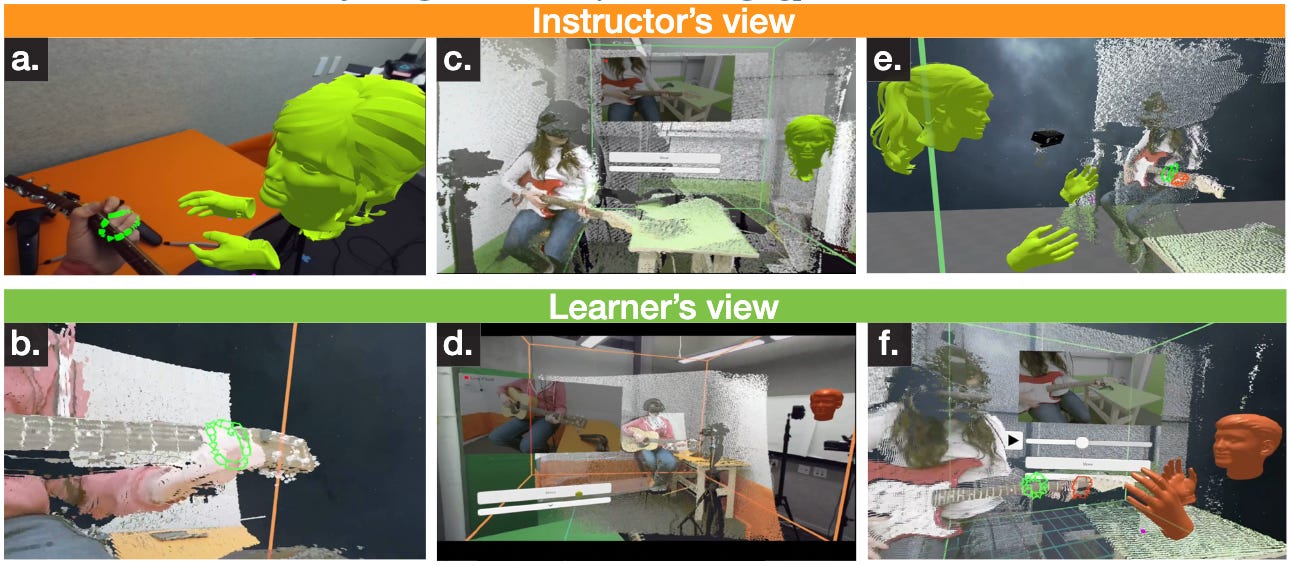

Loki supports 2 types of views: AR and VR. In the AR view, the user sees their own local space with other artifacts overlaid on the scene. In the VR view, the user sees a live 3D reconstruction of the remote user’s space, along with the video feeds and the menu. Instead of just audio and video, Loki has 5 interface primitives:

Audio - Standard real-time audio connection between the two users that also relays ambient sound.

Video - The local user can see 2D video captured by each Kinect camera.

Hologlyph - A live 3D reconstruction of the remote space from the Kinect cameras (see the image above). This can be seen in an immersive VR view or as an object in the AR view that can be rescaled, rotated, and repositioned using the controllers.

Avatars - When the local user is in AR view, and the remote user is observing the local user in VR view, the local user will be able to see the remote user’s relative position in their local space, represented as a head and hands.

Menu - Settings can be changed here.

To interact, instead of using a chat box or sharing a screen, Loki provides 2 methods:

Teleportation - A feature used to navigate the hologlyph. Apart from walking around the space, the user can also use their controller to point to a spot and teleport there. The user can also snap-teleport to the position of the Kinect cameras. This can be done in AR view to switch to the VR view from that specific point, or within VR view.

Multi-space annotations - Each user can create mid-air annotations. These annotations are color-coded to identify which user made them, and are drawn either outlined or filled to show whether they were made in the local space or in the hologlyph.

Loki is made to be a flexible system

Apart from supporting both AR and VR views, users can also move the Kinect cameras as they please. The 3D reconstruction and relative positions of each tracked object will be automatically updated based on the new Kinect positions. This allows a user to move the camera to a section to show richer detail or modify the area to be recorded.

Sample Configurations

Since each user can be either in VR or AR view, here are some sample configurations to show how the 2 views and additional components make for a more immersive, collaborative experience when teaching and learning a physical task:

Student Observing (student in VR, teacher in AR) - The student is able to closely observe the teacher performing the task from all angles and make annotations as they’d like. The teacher is also able to see the student’s avatar moving around their local space and can tell the student to view it from different angles.

Instructor Guidance (teacher in VR, student in AR) - The student performs the task, and the teacher can closely analyze the student’s performance and make notes.

Work Together (both in AR) - Both student and teacher are working on the task and can keep track of each other’s progress through the video and hologlyph.

Collaborative Review (both in VR) - Both student and teacher can rewatch a previously recorded session, analyze it from different angles, and make notes on the student’s performance.
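The four sample configurations are just the 2×2 combinations of each user’s view. As a quick way to keep them straight, here is a minimal Python sketch of that mapping. The `View` enum and `configuration` function are names I made up for illustration; they are not from the paper.

```python
from enum import Enum


class View(Enum):
    AR = "AR"
    VR = "VR"


# (teacher view, student view) -> sample configuration named in this post.
CONFIGURATIONS = {
    (View.AR, View.VR): "Student Observing",
    (View.VR, View.AR): "Instructor Guidance",
    (View.AR, View.AR): "Work Together",
    (View.VR, View.VR): "Collaborative Review",
}


def configuration(teacher: View, student: View) -> str:
    """Return the sample configuration for a pair of views (hypothetical helper)."""
    return CONFIGURATIONS[(teacher, student)]


if __name__ == "__main__":
    print(configuration(View.AR, View.VR))
```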

🧠 Evaluation

The authors performed a lightweight exploratory user study. 8 participants were asked to learn a hot wire 3D foam carving task in a 30-minute session with one of the authors acting as the instructor. The participants then filled out a 5-point Likert scale questionnaire about the ease of understanding and usability of Loki’s features and modes. Here are some highlights:

The way participants used Loki was personal and depended on how each of them learns and what they were comfortable with.

In different stages of learning, participants manipulated the hologlyph differently. For example, some liked to keep it small and to the side as reference material, while others preferred it scaled 1:1 to the side so they could easily see all the details.

Participants felt that the multiple components of Loki, such as video, annotations, and 3D models, helped them engage their partners better.

📑 Related Work

Telepresence and Collaboration

A large body of work has investigated remote collaboration and immersive telepresence. Some have pointed out the value of using a mixed-reality setup for remote collaboration. These works have proposed several extensions like mid-air annotations, identified the benefits of having access to multiple viewpoints in MR setups, and found that access to both digital and physical tools is important in creating a seamless remote collaboration experience. Here are some highlight references:

Physical task guidance system where the expert uses a pen and tablet to control a laser projection device in the remote location (Annotating with light for remote guidance).

A client-server software architecture design for in-person, multi-user AR (“Studierstube”: An environment for collaboration in augmented reality).

Avatar design for remote collaboration between an AR and VR user. The avatar of the VR user resizes in the AR user’s view so they can constantly keep track of the VR user’s avatar. They found that this adaptive avatar significantly improved social presence and the overall MR collaboration experience (Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration).

Real-time spatial capture

Recent work on capturing the user’s scene in real time has enabled new interaction designs that allow the user to interact with their space. It also enables the real-time 3D meshing and point cloud rendering that Loki uses. This field was previously explored in our Holoportation post, so you can refer to that for more details.

Physical task guidance systems

As mentioned above, systems that support teaching physical tasks should fulfill all 4 requirements; however, the authors did not find any prior work that does so. Most prior work on physical task guidance systems focused on providing experiential learning and opted to provide automatic feedback to the user. This form of feedback is found to be rather coarse. Other works in using AR/VR systems for physical task guidance focused on the psychomotor aspect.

Specifically, they identified 2 limitations in prior work: one fixed display configuration for the learner and another for the instructor, and a lack of symmetric affordances between the learner and instructor. Here are some papers that I found interesting to read through:

AR mirror training system for physical movement sequences that tracks the user’s movements and compares them to stored data (YouMove: enhancing movement training with an augmented reality mirror).

A review of articles that involve AR simulation for laparoscopic surgery (What is going on in augmented reality simulation in laparoscopic surgery?).

VR tutorial system to teach users 3D painting (TutoriVR: A Video-Based Tutorial System for Design Applications in Virtual Reality).

💻 Technical Implementation

The Loki System

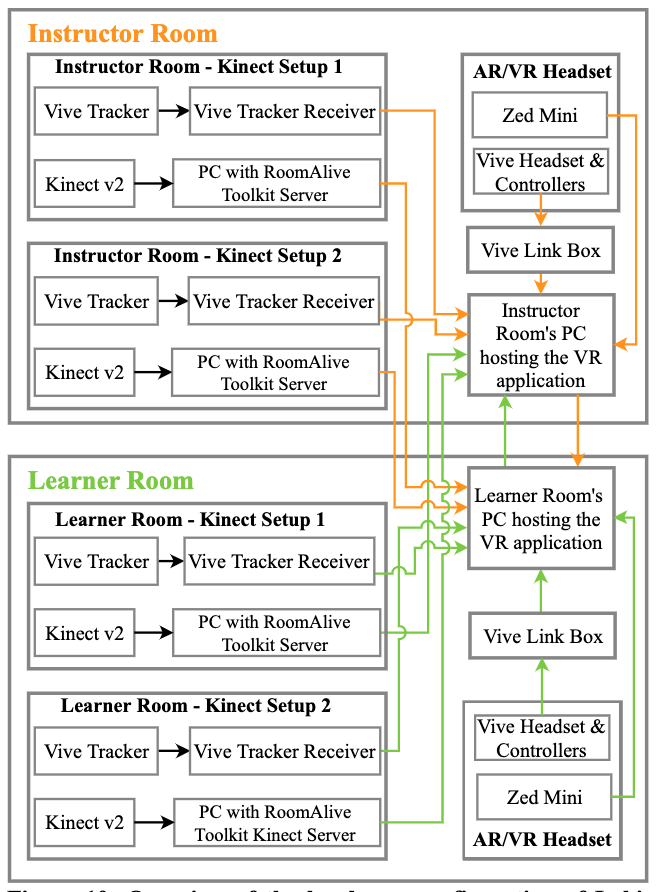

Each user has an identical setup using an HTC Vive, ZED Mini, and Kinect depth cameras. Here’s a full breakdown of the system setup and devices used:

Rendering MR

Since the HTC Vive is a VR headset, rendering VR does not need additional setup. To render AR, the authors strapped a ZED Mini camera onto the headset and streamed its image to the headset display.

Rendering the Hologlyph

HTC’s IR emitters are mounted in each setup and used as references to track the position of the headset, controllers, and Kinect cameras.

The Kinect camera positions are used to dynamically calibrate the 3D reconstruction feed.

Instead of rendering a mesh of the scene, the authors rendered the raw point clouds to maintain scene granularity and minimize erroneous distortions.

The authors created a custom shader to render these point clouds, which update at 30 fps in VR view and at 10 fps in AR view.
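To make the two refresh rates concrete, here is a minimal Python sketch of a mode-dependent throttle that decides when a new point cloud frame gets pushed to the renderer. Only the 30 fps and 10 fps figures come from the paper. The `PointCloudThrottle` class and the idea of calling it from a faster render loop are my own assumptions, not how the authors’ shader works.

```python
# Point cloud refresh rates reported for each view.
REFRESH_HZ = {"VR": 30, "AR": 10}


class PointCloudThrottle:
    """Hypothetical helper: accept a new point cloud frame only when one is due."""

    def __init__(self, mode: str) -> None:
        self.mode = mode
        self._last_update = None  # time (seconds) of the last accepted frame

    def should_update(self, now: float) -> bool:
        interval = 1.0 / REFRESH_HZ[self.mode]
        # Small epsilon so floating-point rounding never skips a due frame.
        if self._last_update is None or now - self._last_update >= interval - 1e-9:
            self._last_update = now
            return True
        return False


if __name__ == "__main__":
    throttle = PointCloudThrottle("AR")
    # Simulate one second of a 90 Hz render loop (assumed headset refresh rate).
    accepted = sum(throttle.should_update(i / 90.0) for i in range(90))
    print(accepted)
```

The lower AR rate presumably leaves headroom for the pass-through camera stream, though that reasoning is my guess rather than something the paper states.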

Communication

The authors created a custom TCP/IP Unity plugin to send data frames between the 2 PCs.

Each data frame contains information on the user’s head and controller poses, current mode, tracked Kinect positions, and metadata used to synchronize the 2 systems.

Audio is transmitted through an IP telecom system.

Separately, Kinect data is transmitted using RoomAlive Toolkit’s KinectV2 Server through a router. Data capture, compression, and decompression are all handled by the toolkit.
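To picture what one of these data frames might look like on the wire, here is a minimal Python sketch. The paper only lists the kinds of information in a frame, so the field layout, the position-plus-quaternion pose encoding, the mode values, the little-endian byte order, and the length prefix are all my assumptions. `SyncFrame` is a made-up name, and the real plugin is a Unity one, not Python.

```python
import struct
from dataclasses import dataclass
from typing import List, Tuple

# Assumed pose encoding: position (x, y, z) followed by a quaternion (qx, qy, qz, qw).
Pose = Tuple[float, float, float, float, float, float, float]

_HEADER = struct.Struct("<IdBB")  # sequence number, timestamp, mode, Kinect count
_POSE = struct.Struct("<7f")      # one pose as seven 32-bit floats


@dataclass
class SyncFrame:
    sequence: int        # metadata used to order frames
    timestamp: float     # metadata used to synchronize the two systems
    mode: int            # assumed encoding: 0 = AR, 1 = VR
    head: Pose
    left: Pose           # left controller
    right: Pose          # right controller
    kinects: List[Pose]  # tracked Kinect positions

    def pack(self) -> bytes:
        body = _HEADER.pack(self.sequence, self.timestamp, self.mode, len(self.kinects))
        for pose in (self.head, self.left, self.right, *self.kinects):
            body += _POSE.pack(*pose)
        # TCP is a byte stream, so prefix each frame with its length.
        return struct.pack("<I", len(body)) + body

    @classmethod
    def unpack(cls, payload: bytes) -> "SyncFrame":
        (length,) = struct.unpack_from("<I", payload, 0)
        body = payload[4:4 + length]
        sequence, timestamp, mode, count = _HEADER.unpack_from(body, 0)
        offset = _HEADER.size
        poses = []
        for _ in range(3 + count):
            poses.append(_POSE.unpack_from(body, offset))
            offset += _POSE.size
        return cls(sequence, timestamp, mode, poses[0], poses[1], poses[2], poses[3:])
```

Under these assumptions, a frame with two Kinects is well under 200 bytes, which fits with the heavy Kinect depth data travelling over a separate channel.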

Synchronizing the space

Only 1 PC stores the recorded Kinect data and video. During shared playback, the stream of data is serialized to a binary file on a shared network drive. When the file is ready to be played, a flag is updated to synchronize the playback.

To map the remote user’s annotations and position in the hologlyph into the local user’s environment, the system uses the transform of each tracked object relative to the Kinect to compute the corresponding position.
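Here is how I read that relative-transform step, as a minimal Python sketch. A point in the remote room is first re-expressed relative to the remote Kinect, then placed wherever the hologlyph sits in the local room. The function names are made up, poses are limited to a rotation about the vertical axis plus a translation to keep the math short, and hologlyph rescaling is left out. Treat it as an illustration of the idea rather than the authors’ implementation.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def rigid(yaw_deg: float, tx: float, ty: float, tz: float) -> Matrix:
    """4x4 rigid transform: rotation about the vertical (y) axis plus a translation."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def invert_rigid(m: Matrix) -> Matrix:
    """Invert a rigid transform: transpose the rotation, then rotate and negate the translation."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [-sum(rt[i][j] * m[j][3] for j in range(3)) for i in range(3)]
    return [rt[0] + [t[0]], rt[1] + [t[1]], rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def apply(m: Matrix, p: Sequence[float]) -> List[float]:
    """Apply a 4x4 transform to a 3D point."""
    return [sum(m[i][j] * p[j] for j in range(3)) + m[i][3] for i in range(3)]


def remote_to_local(p_remote: Sequence[float],
                    remote_kinect_pose: Matrix,
                    local_hologlyph_pose: Matrix) -> List[float]:
    """Map a point from the remote room into the local room via the Kinect's frame."""
    p_in_kinect = apply(invert_rigid(remote_kinect_pose), p_remote)
    return apply(local_hologlyph_pose, p_in_kinect)


if __name__ == "__main__":
    remote_kinect = rigid(90.0, 1.0, 0.0, 2.0)    # tracked Kinect pose in the remote room
    local_hologlyph = rigid(0.0, 5.0, 1.0, 0.0)   # where the hologlyph is placed locally
    print(remote_to_local([2.0, 0.0, 2.0], remote_kinect, local_hologlyph))
```

Because everything is expressed relative to the Kinect, moving the camera only changes `remote_kinect_pose`, and the same two steps still land annotations and avatars in the right place.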

🔑 Discussion

🧐 Why is this interesting?

There were 2 points that intrigued me about this paper. The first is its deep dive into understanding the key components of learning a physical task. Loki’s design draws closely on these learning theories and thus makes for a more grounded application.

The second is how it supports multiple display types and interaction methods for the instructor and student. Most related work I’ve encountered focuses on a single configuration and thus tends to have narrow use cases. For Loki, I can already envision how this setup can be useful for remote instruction of physical tasks.

👾 Limitations/Concerns

A clear limitation of this kind of setup is the amount of hardware and processing power it requires. Although it shows the potential that immersive remote collaboration brings, it’s still important to ask whether or not this is the way to go. Is this a setup that we want? If not, what devices should we remove, and how can we improve?

The paper itself also did not do an in-depth user study to understand whether or not users benefited from using Loki. We would need more data to see how beneficial Loki is compared to existing remote collaboration methods.

Last but not least, this setup is still unrealistic for the average use case. More work needs to be done to reduce costs, setup time, and hardware overhead.

🥳 Opportunities

I would love to see new interaction methods to help users remotely collaborate. Although mid-air annotations and shared media assets are helpful, I think that there is still room to explore interaction methods unique to MR setups.

As I read this paper, I also found myself repeatedly asking the question: is this the best configuration? I wonder whether there are other ways to achieve the same level of immersion and seamlessness with less hardware or with a different approach entirely.