“In VR, everything is possible!”: Sketching and Simulating Spatially-Aware Interactive Spaces in Virtual Reality

The idea of using VR to simulate different experiences is not new. With VR, we can simulate futuristic/unsafe scenes without any space or technical restrictions, all in a controlled environment. This paper takes the idea of running simulations in VR into the context of rapidly prototyping interactive spaces (spaces equipped with IoT and interactive devices like projectors and tablets).

Interactive spaces themselves are becoming more popular, poised to help museums create novel experiences, make team collaborations more effective, and generally make spaces more enticing to visit. This paper’s use case for simulation in VR highlights several advantages and disadvantages that this approach entails.

This Week’s Paper

Hans-Christian Jetter, Roman Rädle, Tiare Feuchtner, Christoph Anthes, Judith Friedl, and Clemens Nylandsted Klokmose. 2020. "In VR, everything is possible!": Sketching and Simulating Spatially-Aware Interactive Spaces in Virtual Reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20). Association for Computing Machinery, New York, NY, USA, 1–16.

DOI Link: 10.1145/3313831.3376652

Summary

Design

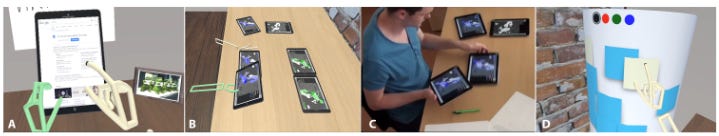

The authors created a design tool that allows designers to prototype and experience interactive spaces in VR. They support this by simulating key technologies commonly found in interactive spaces such as motion tracking, unusual form factors like large touchscreens, and networked devices. Users can add any number of these simulated technologies and combine them to create a cohesive experience virtually.

The authors identified five requirements for this tool: support for direct manipulation of virtual apps and objects, the ability to include interactive components, the ability to simulate spatially-aware tracking systems, support for real-world and futuristic devices, and close integration of redesign and testing. These requirements were formulated based on the authors’ previous work in designing interactive spaces.

I will go over this tool’s design in more detail:

1. Direct Manipulation of Apps and Objects

In early iterations of the system, test users found it cumbersome to interact with the simulated space using controllers and asked for more direct interaction methods. Thus, users now interact with the space using their hands as input: they can grasp a virtual object to grab it, interact with touch screen devices using their fingers, and do free-hand drawing on surfaces. To compensate for the lack of tactile feedback, the proxy hand turns green when a user is successfully grabbing an object. Users also physically walk around to navigate the VR world.

2. Integration of Interactive Components

Since an interactive space would often include applications on devices (e.g. pressing buttons on an iPad application would affect the projector’s display), it is important for the system to simulate these applications whilst supporting rapid iterations of them. To fulfill these two requirements, the authors implemented a mechanism that allows any mesh to display a web browser in VR. In this way, designers can easily modify the web application outside of VR using any IDE they are comfortable with and load the exact application within VR. Web applications are a good compromise since they are popular for multi-device application development.

3. Simulation of Spatially-Aware Tracking Systems

For this system, the authors implemented two types of spatial tracking systems: OptiTrack, a commonly-used and commercially available tracking system, and HuddleLamp, a research prototype whose source code is publicly available on GitHub. These tracking systems allow users to design more complex and intuitive interactive spaces. Each tracking simulation has parameters that allow a user to simulate jitter and adjust the tracking frequency.
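
To make the jitter and tracking-frequency parameters concrete, here is a minimal Python sketch of how a simulated tracker might degrade a perfectly known VR position. This is not the authors' code: the class `SimulatedTracker`, its parameters `frequency_hz` and `jitter_std_m`, and the choice of Gaussian noise are all assumptions made for illustration.

```python
import random


class SimulatedTracker:
    """Hypothetical simulated tracker (illustrative only, not the paper's implementation)."""

    def __init__(self, frequency_hz, jitter_std_m, seed=0):
        self.frequency_hz = frequency_hz  # assumed: position updates per second
        self.jitter_std_m = jitter_std_m  # assumed: std. dev. of Gaussian noise, in metres
        self._rng = random.Random(seed)   # seeded so runs are repeatable
        self._last_sample_time = None
        self._last_reported = None

    def report(self, true_position, now_s):
        """Return the position the tracker would report at time now_s (seconds)."""
        period = 1.0 / self.frequency_hz
        due = (
            self._last_sample_time is None
            or now_s - self._last_sample_time >= period
        )
        if due:
            # Take a fresh sample of the ground truth and add noise to each axis.
            self._last_reported = tuple(
                c + self._rng.gauss(0.0, self.jitter_std_m) for c in true_position
            )
            self._last_sample_time = now_s
        # Between samples, the stale value is reported again.
        return self._last_reported
```

With `jitter_std_m=0.0` the tracker returns the true position, but only as often as `frequency_hz` allows. Lowering the frequency or raising the jitter would let a designer feel how a less precise tracking system changes the experience.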

4. Real-World and Futuristic Devices

One of the key advantages of simulating in VR is that it frees designers from physical constraints. To explore this advantage, the authors include several futuristic devices such as cylindrical and spherical touch screens that the user can experiment with.

5. Close Integration of Redesign and Testing

The system was built with a “use mode” and a “design mode” so that the user can rapidly modify and test their designs. In the “design mode”, the user can scale objects, turn gravity on or off for any object, and toggle the object’s collision detection (which determines whether it can be grasped). To resize an object, the user grasps each side of it with both hands and pulls their hands apart or pushes them together. Objects are scaled uniformly.
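
The two-handed resize gesture can be sketched as a single scale factor derived from the distance between the hands. This is an illustrative Python sketch under stated assumptions, not the paper's implementation: the function name `uniform_scale` and the `min_scale` clamp are hypothetical.

```python
import math


def uniform_scale(initial_scale, grab_left, grab_right, now_left, now_right,
                  min_scale=0.05):
    """Return the new uniform scale of an object held with both hands.

    grab_left / grab_right: hand positions (x, y, z) when the grasp started.
    now_left / now_right:   current hand positions.
    min_scale:              assumed lower bound so the object never collapses.
    """
    start = math.dist(grab_left, grab_right)
    if start == 0.0:
        # Degenerate grasp (both hands at the same point): leave the scale alone.
        return initial_scale
    # The ratio of current to initial hand distance scales all three axes equally.
    factor = math.dist(now_left, now_right) / start
    return max(min_scale, initial_scale * factor)
```

Because one factor is applied to every axis, pulling the hands twice as far apart doubles the object's size while keeping its proportions.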

In the “use mode”, users can draw on any canvas, interact with all the browsers on the devices, and grasp objects that have their collision status turned on. From related work, the authors also found that sketching is an important process for designers to conceptualize their designs. Thus, the system also supports free-hand drawing. In the “use mode”, the user can see spheres of color next to their hand when they look at their palm. The user can then use the other hand’s finger and dip it into the sphere to select a color. This finger can be used to draw on any surface.

Results and their interpretations

The authors wanted to observe how designers would use this system, hear their opinions on using it, and understand how it affected their design process. They conducted a three-part study with 12 participants (each with 2-12 years of practical design experience). In each part, the participants were asked to think aloud. The authors also conducted a concluding interview.

After each day of running studies, the authors clustered the observations to organize ideas. They found in hindsight that the clustering scheme was saturated by the 6th participant. Here are some of the opportunities and challenges that they observed:

Opportunities

Participants were immersed in the system and would avoid colliding with virtual objects such as tables.

Participants often tried to experience size relations (for example, whether the proportions of a sketch were reasonable) and the visibility of displays (for example, whether a display would be blocked at certain angles).

They also tried to understand the ergonomics of the objects they added (for example, whether all corners of a virtual touch screen are reachable).

Participants would first play with and get to know the different objects available in the system before focusing on distinct scenarios.

Participants also used VR to act out the design scenarios instead of creating multiple states and transitions. This allows for more natural ways of demonstrating the intended user interactions.

Challenges

The design tool and its set of available assets shaped the resulting designs, because some ideas were tedious to implement or impossible to explore with this setup.

The system was a bare-bones version of a scene editor and did not have functions such as delete, copy, or paste.

Related Work

The authors referenced multiple design tools, including VR-based design tools, tools for creating multi- and cross-device interactive spaces, and tools for proxemics and spatially-aware interactions. Here are some of the highlights:

Example of using VR to simulate a full-surround augmented reality system (Evaluating wide-field-of-view augmented reality with mixed reality simulation).

A new design workflow that uses 3D sketching and VR simulation to help designers collaboratively explore and develop new automotive designs (Collaborative Experience Prototyping of Automotive Interior in VR with 3D Sketching and Haptic Helpers).

A book that explains why sketching is a key component in creating new products and systems (Sketching user experiences: getting the design right and the right design).

Paper that evaluates the importance of different sources of depth cues (such as relative size and binocular disparities) on how people perceive space (How the eye measures reality and virtual reality).

A GUI builder to support interactive development of cross-device user interfaces (Interactive development of cross-device user interfaces).

A modular hardware platform allowing users to link mobile devices together to evaluate their ergonomics and interaction design (SurfaceConstellations: A Modular Hardware Platform for Ad-Hoc Reconfigurable Cross-Device Workspaces).

A study to understand key challenges designers have when creating multi-device experiences (Understanding the Challenges of Designing and Developing Multi-Device Experiences).

WeSpace, an interactive space designed for collaboration between scientists. It is an example of how prototypes of interactive spaces have been developed, and it showcases the advantages of an interactive space (WeSpace: the design development and deployment of a walk-up and share multi-surface visual collaboration system).

Technical Implementation

Several notable implementation details are as follows:

The system was implemented in Unity for an HTC Vive, with a Leap Motion attached for hand tracking.

To run web browsers on meshes, the authors used ZenFulcrum’s embedded browser.

To simulate multi-touch input, the authors took the following steps:

First, they extended the browser component to support the PointerEvent API and TouchEvent API.

They then added a game object on each fingertip.

Each game object controls a touch proxy in a gravity-like motion by applying velocity and angular velocity to it. The touch proxy stays on the browser’s surface.

Each browser has a collider that defines the volume that is interactable.

Each touch proxy has a collider. When this collider collides with the browser’s collider, a touch is registered.

When a touch proxy is moving while colliding with the browser’s collider, the system calculates the distance between the proxy and the nearest point on the browser’s mesh. Different events (such as scrolling up or down) are triggered based on this distance.
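
The steps above can be approximated outside Unity with a short Python sketch. It is a simplified illustration, not the authors' implementation: the browser mesh is assumed to be a flat rectangle, the collider volume is collapsed into a single hypothetical `touch_depth` threshold, and the mapping from distance to the event names `pointerdown`, `pointermove`, and `pointerup` is my assumption about how such a system could feed the PointerEvent API.

```python
import math


class BrowserSurface:
    """Flat browser panel in the z = 0 plane, spanning [0, width] x [0, height] metres."""

    def __init__(self, width, height, touch_depth=0.01):
        self.width = width
        self.height = height
        self.touch_depth = touch_depth  # assumed: max distance that counts as a touch

    def nearest_point(self, p):
        """Clamp a 3D position onto the panel to get the nearest surface point."""
        x = min(max(p[0], 0.0), self.width)
        y = min(max(p[1], 0.0), self.height)
        return (x, y, 0.0)

    def distance(self, p):
        """Distance between the proxy and the nearest point on the surface."""
        return math.dist(p, self.nearest_point(p))


class TouchProxy:
    """Tracks one fingertip and turns its distance to the surface into events."""

    def __init__(self, surface):
        self.surface = surface
        self.touching = False

    def update(self, fingertip):
        """Return the pointer event name for this frame, or None if nothing happens."""
        in_touch = self.surface.distance(fingertip) <= self.surface.touch_depth
        if in_touch and not self.touching:
            event = "pointerdown"   # finger just reached the surface
        elif in_touch:
            event = "pointermove"   # finger stays on the surface
        elif self.touching:
            event = "pointerup"     # finger just left the surface
        else:
            event = None
        self.touching = in_touch
        return event
```

Creating one `TouchProxy` per fingertip gives multi-touch: each proxy keeps its own state, so several fingers can be down on the same surface at once.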

Discussion

Why is this interesting?

I think this paper showcased several key advantages that simulating experiences in VR can bring (such as the visualization of space and how being immersed in the scene encourages exploration). As VR headsets continue to improve, I also foresee more refined editors (such as more advanced functions to improve design workflow) being created and a wider variety of experiences (maybe smart homes of the future?) being designed.

Limitations/Concerns

This approach has several limitations. Firstly, since the hand tracking is vision-based, the hands have to be in view in order to be tracked, which hinders eyes-free interactions. I also wonder how accurate the hand tracking can be and whether this affected the usability of the system. The display resolution is also much lower than the resolution of real-world devices today, which affects text readability in simulated applications. Although the VR experience is visually similar to the real one, the physical experience is not always so (for example, the headset weighs down on the head), which can affect user performance in more rigorous user experience evaluations.

Opportunities

I think this approach can be further extended by supporting remote collaboration so multiple designers can design the space together and perhaps more interestingly, multiple users can experience the space together. Of course, additional iterations can also be made to improve the editor’s workflow. It would also be interesting to explore how designers can design their own futuristic devices through sketches (as inspired by prior papers mentioned in this newsletter).